Speaker: Lena Wimmer @LWimmer

Affiliation: University of Freiburg

Title: Cognitive effects of reading fiction: A meta-analysis

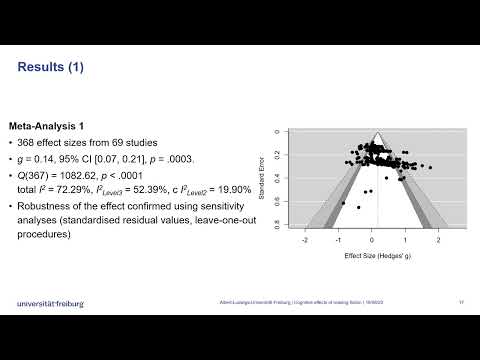

Abstract (long version below): In this project, we meta-analysed experiments examining cognitive effects of reading fiction. A multi-level random-effects model of 368 effect sizes from 69 studies revealed small cognitive benefits of reading fiction, g = 0.14, 95% CI = [0.07, 0.22], p = .0003. According to moderator analyses, the comparison conditions ‘no media exposure’ and ‘watching fiction’ were associated with greater effects than the comparison condition ‘reading non-fiction’. Additionally, effect sizes differed from zero for the outcome variables empathy and mentalising only, but not for other cognitive outcomes. However, this body of research does not provide a rigorous test of the assumption that reading fiction causes genuine cognitive changes.

Long abstract

Despite the ubiquity of fiction reading, its psychological effects remain debated. The disputes concern both the types of psychological outcomes influenced by reading fiction (e.g., whether reading fiction improves social cognition) and their desirability (e.g., whether beliefs acquired from fiction are on balance more likely to be correct than incorrect). Such debates over the cognitive effects of reading fiction highlight the need for meta-analyses that can help resolve them.

In the present project, we carried out a meta-analysis that significantly updated and extended Dodell-Feder and Tamir’s (2018) meta-analysis on social-cognitive effects of reading fiction. In view of the controversies named above, we synthesised effects on all potentially longer-lasting cognitive outcomes. Following the APA Dictionary of Psychology (VandenBos, 2015), we defined cognition as all forms of knowing and awareness, such as perceiving, conceiving, remembering, reasoning, judging, imagining, and problem solving. Direct measures of literacy were excluded since we were interested in outcomes going beyond reading ability. We considered experimental studies comparing the cognitive skills of individuals assigned to a fiction-reading condition with those of individuals assigned to a control condition. We investigated the following research questions:

- What is the synthesised effect size across these experiments?

- Does this total effect size depend on any of the following moderators?
  - Study format (online vs. in person): relevant due to differences in participant supervision.
  - Sample type (students vs. community): important because of differing incentives.
  - Type of comparison condition (reading non-fiction vs. watching fiction vs. no media exposure): relevant to the question of whether effects are driven by reading fiction or by reading in general.
  - Length of reading assignment: if reading fiction affects cognition because the cognitive skills relevant to real life are practised during reading, effects should grow with the length of the reading assignment.
  - Outcome variable (empathy, mentalising, moral cognition, general knowledge, outgroup judgements, other thinking processes): important for determining whether outcomes are affected differently by reading fiction.
  - Risk of bias (outcome assessment via self-report vs. behavioural performance): worthwhile due to the lower validity of self-report measures.
  - Age of participants: of interest due to cross-generational changes in reading habits and the sensitivity of cognition to development.
  - Gender of participants (percentage of female participants): reasonable since females have a stronger fiction preference than males.

Our literature search yielded k = 69 studies. From these, 368 effect sizes were calculated as bias-corrected Hedges’ g, representing the standardised mean difference between the fiction-reading group and the comparison group, such that positive effect sizes represent better performance in the fiction group. We implemented a multilevel random-effects meta-analytic model. Overall, this model revealed small cognitive benefits of reading fiction, g = 0.14, 95% CI = [0.07, 0.22], p = .0003. Heterogeneity analyses revealed a significant amount of heterogeneity, Q(367) = 1082.62, p < .0001. Sensitivity checks confirmed the robustness of the findings.
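As an illustration of the effect-size metric, the following is a minimal sketch of how a bias-corrected Hedges’ g and its sampling variance can be computed from group summary statistics, using the standard small-sample correction factor J = 1 - 3/(4·df - 1). It also includes a rough plausibility check that back-calculates a standard error and a two-sided p value from the reported estimate and confidence interval. The function names are hypothetical, and the check assumes a normal approximation; the multilevel model described above may have used a different reference distribution, so only approximate agreement with the reported p value should be expected.

```python
import math


def hedges_g(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Bias-corrected standardised mean difference (Hedges' g).

    Positive values mean the treatment (fiction) group scored higher.
    Returns (g, approximate sampling variance of g).
    """
    df = n_t + n_c - 2
    # Pooled standard deviation across both groups
    pooled_sd = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (mean_t - mean_c) / pooled_sd
    # Small-sample correction factor (common approximation)
    j = 1 - 3 / (4 * df - 1)
    # Large-sample variance of d, scaled by the squared correction
    var_d = (n_t + n_c) / (n_t * n_c) + d ** 2 / (2 * (n_t + n_c))
    return j * d, j ** 2 * var_d


def two_sided_p_from_ci(estimate, ci_low, ci_high, z_crit=1.959964):
    """Back-calculate SE, z and a two-sided p value from a 95% CI.

    Assumes a symmetric CI built from a normal reference distribution.
    """
    se = (ci_high - ci_low) / (2 * z_crit)
    z = estimate / se
    p = math.erfc(abs(z) / math.sqrt(2))
    return se, z, p


if __name__ == "__main__":
    # Made-up group statistics, for illustration only
    g, var_g = hedges_g(10.5, 2.0, 50, 10.0, 2.0, 50)
    print(f"g = {g:.3f}, var = {var_g:.4f}")

    # Values taken from the abstract: g = 0.14, 95% CI = [0.07, 0.22]
    se, z, p = two_sided_p_from_ci(0.14, 0.07, 0.22)
    print(f"SE = {se:.4f}, z = {z:.2f}, p = {p:.5f}")
```

Under these assumptions, the back-calculated p value falls in the same range as the reported p = .0003, which suggests the reported estimate, interval, and p value are mutually consistent.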

The impact of moderator variables was tested in a series of meta-regression models, with each model including one of the moderator variables mentioned above (see research question 2). Only type of comparison condition and outcome variable were found to be significant moderators.
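To make the idea of a single-moderator analysis concrete, here is a deliberately simplified sketch: it pools effect sizes within each level of one categorical moderator using inverse-variance weights. This is a fixed-effect subgroup summary, not the multilevel meta-regression used in the project, and it ignores the dependence between effect sizes drawn from the same study. The function name and all numbers in the example are hypothetical and do not come from the meta-analysis.

```python
import math
from collections import defaultdict


def pooled_by_level(effects):
    """Inverse-variance pooled effect per moderator level.

    `effects` is an iterable of (level, g, var_g) tuples.
    Returns {level: (pooled_g, standard_error)}.
    """
    sums = defaultdict(lambda: [0.0, 0.0])  # level -> [sum(w * g), sum(w)]
    for level, g, var_g in effects:
        w = 1.0 / var_g  # more precise effect sizes receive more weight
        sums[level][0] += w * g
        sums[level][1] += w
    return {
        level: (wg / w, math.sqrt(1.0 / w))
        for level, (wg, w) in sums.items()
    }


if __name__ == "__main__":
    # Invented toy data: (comparison condition, g, sampling variance)
    toy = [
        ("reading non-fiction", 0.05, 0.04),
        ("reading non-fiction", 0.10, 0.05),
        ("no media exposure", 0.30, 0.04),
        ("watching fiction", 0.25, 0.06),
    ]
    for level, (g, se) in pooled_by_level(toy).items():
        print(f"{level}: g = {g:.2f} (SE = {se:.2f})")
```

A full analysis would instead fit a mixed-effects model with the moderator as a predictor and random effects for studies and for effect sizes nested within studies.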

In particular, the comparison conditions ‘no media exposure’ and ‘watching fiction’ were associated with greater effects than ‘reading non-fiction’. This finding touches on the question of whether effects are driven by reading in general, fiction consumption in general, or the combination of the two (i.e., reading fiction specifically). The current pattern of results suggests that fiction consumption in the form of watching fiction does not contribute to the effect, whereas the activity of reading does account for a portion of it. Beyond this, reading fiction specifically was found to make a distinct contribution.

In addition, effect sizes differed from zero for the outcome variables empathy and mentalising only. So, out of the cognitive skills under investigation only empathy and mentalising seem to benefit from experimental interventions of reading fiction, and even then, only to a very small extent.

The aggregate effect was stable across participant characteristics including gender, age, and type of participant group (students vs. community). Perhaps reading assignments like the ones used in these experiments are powerful enough to override the influence of such characteristics. Likewise, study format (online vs. in-person) did not act as a significant moderator variable. It is possible that attention checks, which are commonly included in online studies to compensate for the lack of in-person monitoring, served their purpose.

Interestingly, the length of reading assignments did not emerge as a significant moderator. This result does not support the assumption that reading fiction affects cognition via practising related skills. It should, however, be noted that the word count of most reading assignments varied between 1,500 and 6,070 words. A single reading task involving approximately 6,000 words may fall short of the length required for longer-lasting effects on cognition. Hence, the present body of research does not provide a rigorous test of the assumption that reading fiction leads to genuine changes in cognitive outcomes.