Speaker : Lilla Magyari

Affiliation : Norwegian Reading Centre for Reading Education and Research, University of Stavanger

Title : The Nature of the ART: challenges in developing an Author Recognition Test for a new population

Abstract : The Author Recognition Test (ART) is a widely used indirect measure of print exposure. In a list of author names, participants are asked to select those that they know to be authors. The underlying assumption is that the more participants read, the more author names they will recognize. The ART is available in just a few languages. In this paper, we reflect on the methodological challenges in developing an ART for a new population, using data from our own study in which we investigate the relation between perceived popularity and literary quality.

Long abstract

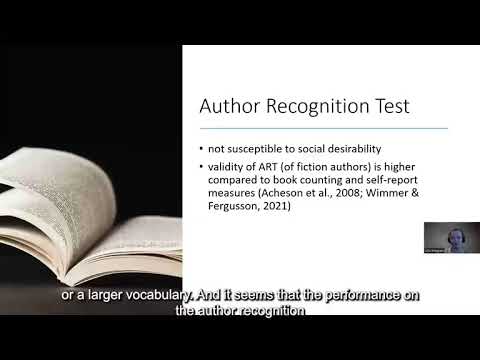

The Author Recognition Test (ART) is an indirect measure of print exposure. In this test, participants get a list of author names intermixed with foils and are asked to select the names that belong to real authors. The test was developed to avoid common problems with self-reports of reading frequency, especially socially desirable responding. Several studies have validated the ART as a good indicator of individual differences in print exposure, i.e., the amount of text people read. The assumption is that people who read more usually recognize more author names.
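As an illustration of how such a checklist can be scored, the sketch below uses one common convention: the number of real authors selected (hits) minus the number of foils selected (false alarms). The text above does not specify a scoring rule, so this convention, the function name `art_score`, and all item names are assumptions made for the example.

```python
def art_score(selected, authors, foils):
    """Score one participant as hits minus false alarms (assumed convention)."""
    selected = set(selected)
    hits = len(selected & set(authors))        # real authors that were ticked
    false_alarms = len(selected & set(foils))  # foils that were ticked
    return hits - false_alarms


# Hypothetical items, not taken from any published ART
authors = {"Author A", "Author B", "Author C", "Author D"}
foils = {"Foil X", "Foil Y"}

# One participant's hypothetical selections: two hits, one false alarm
responses = ["Author A", "Author C", "Foil X"]
score = art_score(responses, authors, foils)
print(score)
```

Subtracting false alarms penalizes guessing, so a participant who ticks every name does not obtain a high score.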

Despite its popularity in research, the ART is available in only a few languages (e.g., in English: Acheson et al., 2009; Mar et al., 2006; in German: Grolig et al., 2020; in Dutch: Koopman, 2015). However, readers with different cultural and language backgrounds have different reading experiences. Hence, an ART developed in one language cannot be used in another population without adjustments. Overall recognition rates can also vary within a culture and language if the test is used years after its development (Acheson et al., 2009; Moore & Gordon, 2015). Therefore, it is important to consider the challenges of determining criteria for compiling lists of author names. The present paper will contribute to a more systematic approach to developing new versions of the ART, using a recent Norwegian project as a case in point.

- ART as a measure of overall reading experience

The assumption behind the ART is that even if participants have not read the works of the authors they recognize, the recognition rate reflects general experience with and interest in reading. This assumption has been supported by cognitive measures of reading skills, which showed that performance on ARTs correlates with, e.g., vocabulary, reading comprehension and speed of word encoding (Stanovich & Cunningham, 1992; Acheson et al., 2008; Moore & Gordon, 2014). This raises two issues: whether general reading skill measures are necessary to validate an ART when it is developed in a new language, and whether author lists of different genres all reflect overall reading experience. While recognition rates for author lists of different genres are usually highly correlated, their correlations with cognitive measures of reading skills might differ between genres (Osana et al., 2007).

- Composition of ART including authors of different genres

Depending on the research question, ARTs might be composed of authors of different genres. In relation to overall reading experience, ARTs based on fiction authors usually correlate more strongly with reading-related measures (e.g., vocabulary; Osana et al., 2007) and also predict actual reading behaviour better than non-fiction author lists (Rain & Mar, 2014). One of the most widely used (English) ARTs used fiction authors, and overall performance on this test was correlated with reading-related skills in several studies (Acheson et al., 2009; Moore & Gordon, 2014). However, this list included authors of both popular fiction (i.e., bestsellers) and literary fiction (i.e., fiction considered to be of high literary quality), and this differentiation of authors was also reflected in a correlated two-factor solution in analyses of the recognition rates (Moore & Gordon, 2014; Kidd & Castano, 2017). This raises the question of how overall reading experience relates to subgenres of fiction (varying, e.g., in the degree of literary quality), and whether there is a subgenre that reflects overall reading experience better or whether a mixture of genres always needs to be included in ART author lists. Moreover, author lists might also contain national as well as international authors (Koopman, 2015; Grolig et al., 2020). An international author list could enable comparisons between ART scores and reading preferences of different cultures by providing common items.

- Categorization of authors in subgenres

Some uses of ART distinguish authors of “high-quality” literature (e.g., classics; critically acclaimed works) from popular authors (e.g., bestsellers), to make use of two subscores in the analyses. However, such differentiation of authors is sometimes done post hoc by the researchers when the results reveal a two-factor solution (Kidd & Castano, 2017).

To create a more expertise-based categorization of “popular” vs. “literary” fiction, we asked 25 experts (Norwegian librarians, book editors and literary scholars) about the popularity and the literary value of works by Norwegian and international authors of fiction. Responses yielded a difference between popular fiction and high-quality literature with respect to both literary value and popularity. Moreover, there was a moderate negative correlation between popularity and literary quality (r(23) = -.438, p < 0.001). Hence, more literary authors are probably more difficult to recognize. This also raises the issue that subgenre author lists might represent different difficulty levels in the ART.
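For readers unfamiliar with the statistic, the sketch below shows how a Pearson correlation between popularity and literary-quality ratings can be computed. The ratings are invented for illustration and are not the study's data; the function name `pearson_r`, the rating scale and the choice of one mean rating per author are likewise assumptions. By the usual convention, the number in parentheses after r is the degrees of freedom, n - 2.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical mean ratings, one value per author (not the study's data)
popularity = [4.5, 4.0, 3.0, 2.5, 2.0]
literary_quality = [2.0, 3.0, 3.5, 3.0, 4.5]

r = pearson_r(popularity, literary_quality)
df = len(popularity) - 2  # degrees of freedom reported alongside r
print(f"r({df}) = {r:.3f}")
```

A negative r in such data means that authors rated as more popular tend to be rated lower on literary quality, which is the pattern described above.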

Based on our theoretical reflections, a review of previous studies, and our own experience with developing a new ART, the paper concludes with recommendations for compiling new measures for new languages and for updating existing ones.